Welcome to the world of data ingestion, a pivotal process in modern data architecture. Data ingestion seamlessly transfers data from diverse sources such as databases, files, streaming platforms, and IoT devices to centralised repositories like cloud data lakes or warehouses. This critical process ensures that raw data is efficiently moved to a landing zone, ready for transformation and analysis.

Data ingestion occurs in two primary modes: real-time and batch processing. In real-time ingestion, data items are continuously imported as they are generated, ensuring up-to-the-moment analytics capability. Conversely, batch processing imports data periodically, optimising resource utilisation and processing efficiency.

Effective data ingestion begins with prioritising data sources, validating individual files, and routing data items to their designated destinations. This foundational step ensures that downstream data science, business intelligence, and analytics systems receive timely, complete, and accurate data.
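
The three activities named above (prioritising sources, validating files, and routing items) can be sketched in a few lines of Python. This is only an illustration: the source names, priorities, destinations, and the `IncomingFile` type are all hypothetical, and the validity check is deliberately simple.

```python
from dataclasses import dataclass

# Hypothetical source priorities and destinations, chosen for illustration only.
PRIORITY = {"payments": 0, "orders": 1, "clickstream": 2}
DESTINATIONS = {"payments": "warehouse/finance", "orders": "warehouse/sales"}
DEFAULT_DESTINATION = "lake/raw"


@dataclass
class IncomingFile:
    source: str
    name: str
    size_bytes: int


def is_valid(f: IncomingFile) -> bool:
    """A deliberately simple check: non-empty CSV or JSON files only."""
    return f.size_bytes > 0 and f.name.endswith((".csv", ".json"))


def plan_ingestion(files):
    """Return (file, destination) pairs, highest-priority sources first."""
    valid = [f for f in files if is_valid(f)]
    # Unknown sources are placed after every known source.
    ordered = sorted(valid, key=lambda f: PRIORITY.get(f.source, len(PRIORITY)))
    return [(f, DESTINATIONS.get(f.source, DEFAULT_DESTINATION)) for f in ordered]


files = [
    IncomingFile("clickstream", "events.json", 2048),
    IncomingFile("orders", "orders.csv", 0),  # empty, so it is rejected
    IncomingFile("payments", "payments.csv", 512),
]
plan = plan_ingestion(files)
for f, dest in plan:
    print(f"{f.source}/{f.name} -> {dest}")
```

In this sketch the empty orders file is dropped, payments is handled first, and the clickstream file falls back to the default landing zone.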

What is Data Ingestion?

Data ingestion, often facilitated through specialised data ingestion tools or services, refers to collecting and importing data from various types of sources into a storage or computing system. This is crucial for subsequent analysis, storage, or processing. The data being ingested varies widely, encompassing structured data from databases, unstructured data from documents, and real-time data streams from IoT devices.

Data ingestion and data integration are related but distinct. Data ingestion focuses on importing data into a system, while data integration combines data from different sources to provide a unified view. An example of data ingestion could be pulling customer data from social media APIs, logs, or real-time sensors to improve service offerings.

Automated data ingestion tools streamline this process, ensuring data is promptly available for analytics or operational use. In business management software services, efficient data integration ensures that diverse data sets, such as sales figures and customer feedback, can be harmoniously combined for comprehensive business insights.

Why Is Data Ingestion Important?

1. Providing flexibility

Data ingestion is pivotal in aggregating information from various sources in today’s dynamic business environment. Businesses utilise data ingestion services to gather data, regardless of format or structure, enabling a comprehensive understanding of operations, customer behaviours, and market trends.

This process is crucial as it allows companies to adapt to the evolving digital landscape, where new data sources constantly emerge. Moreover, data ingestion tools facilitate the seamless integration of diverse data types, managing varying volumes and speeds of data influx. For instance, automated data ingestion ensures efficiency by continuously updating information without manual intervention.

This capability distinguishes data ingestion from traditional data integration, which focuses more on comprehensively merging datasets. Ultimately, flexible data ingestion empowers businesses to remain agile and responsive amid technological advancements.

2. Enabling analytics

Data ingestion is the foundational process in analytics, acting as the conduit through which raw data enters analytical systems. Efficient data ingestion facilitates the collection of vast data volumes and ensures that this data is well-prepared for subsequent analysis.

Businesses, including those seeking to optimise their operations through business growth consultancy, rely on robust data ingestion services and tools to automate and streamline this crucial task.

For instance, a retail giant collects customer transaction data from thousands of stores worldwide. Automated data ingestion tools seamlessly gather, transform, and load this data into a centralised analytics platform. This process enhances operational efficiency and empowers decision-makers with timely insights.

3. Enhancing data quality

Data ingestion, primarily through automated services and tools, enhances data quality. First, various checks and validations are executed during data ingestion to ensure data consistency and accuracy. This process includes data cleansing, where corrupt or irrelevant data is identified, corrected, or removed.

Furthermore, data transformation occurs, in which data is standardised, normalised, and enriched. Data enrichment, for instance, involves augmenting datasets with additional relevant information, enhancing their context and overall value. Consider a retail company automating data ingestion from multiple sales channels.

Here, data ingestion ensures accurate sales data collection and integrates customer feedback seamlessly. This capability is vital for optimising business intelligence services and driving informed decision-making.
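
As a rough illustration of the cleansing and enrichment described above, the sketch below drops unusable sales records and then adds a product category to each remaining one. The field names and the `PRODUCT_CATEGORIES` lookup are hypothetical and stand in for whatever reference data a real retailer would hold.

```python
# Hypothetical reference data used to enrich each sale with a category.
PRODUCT_CATEGORIES = {"sku-1": "footwear", "sku-2": "outerwear"}


def cleanse(sales):
    """Drop records whose amount is missing, non-numeric, or negative."""
    return [
        s for s in sales
        if isinstance(s.get("amount"), (int, float)) and s["amount"] >= 0
    ]


def enrich(sale):
    """Return a copy of the sale with a 'category' field added."""
    category = PRODUCT_CATEGORIES.get(sale["sku"], "unknown")
    return {**sale, "category": category}


sales = [
    {"sku": "sku-1", "amount": 50.0},
    {"sku": "sku-2", "amount": None},  # corrupt record, removed by cleanse()
    {"sku": "sku-9", "amount": 12.0},  # no category on file
]
enriched = [enrich(s) for s in cleanse(sales)]
for row in enriched:
    print(row)
```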

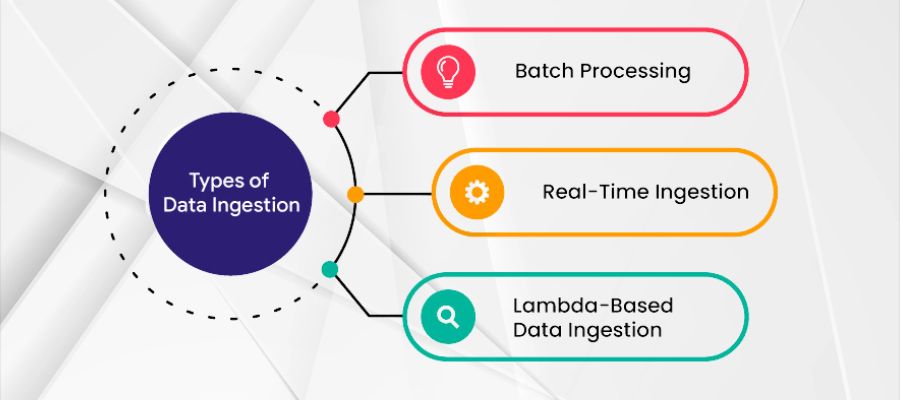

Types of Data Ingestion

1. Batch Processing

Batch processing, a fundamental data ingestion method, involves gathering data over a specific period and processing it all at once. This approach is particularly beneficial for tasks that do not necessitate real-time updates.

Instead, data can be processed during off-peak hours, such as overnight, to minimise impact on system performance. Common examples include generating daily sales reports or compiling monthly financial statements. Batch processing is valued for its reliability and simplicity in efficiently handling large volumes of data.

However, it may not meet the needs of modern applications that demand real-time data updates, such as those used in fraud detection or stock trading platforms.
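
A daily sales report of the kind mentioned above might be produced along these lines. The sketch assumes transactions have already been collected as simple `(day, store, amount)` tuples, which is an illustrative layout rather than any particular tool's format.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transactions gathered over a period: (day, store, amount).
transactions = [
    (date(2024, 1, 1), "store_a", 20.0),
    (date(2024, 1, 1), "store_b", 35.5),
    (date(2024, 1, 1), "store_a", 4.5),
    (date(2024, 1, 2), "store_a", 10.0),
]


def daily_sales_report(batch):
    """Process the whole batch in one pass, totalling sales per (day, store)."""
    totals = defaultdict(float)
    for day, store, amount in batch:
        totals[(day, store)] += amount
    return dict(totals)


report = daily_sales_report(transactions)
for (day, store), total in sorted(report.items()):
    print(day.isoformat(), store, total)
```

Nothing is computed until the full batch is available, which is what makes this style easy to schedule for off-peak hours.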

2. Real-time Processing

In contrast, real-time processing revolves around ingesting data immediately as it is generated. This method allows instantaneous analysis and action, making it essential for time-sensitive applications like monitoring systems, real-time analytics, and IoT applications.

Real-time processing enables swift decision-making by providing up-to-the-moment insights. Nevertheless, it requires substantial computing power and network bandwidth resources. Additionally, a robust data infrastructure is essential to effectively manage the continuous influx of data.
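
By contrast with a batch job, a real-time pipeline handles each event as it arrives. The sketch below uses a Python generator as a stand-in for a live source such as a message queue or sensor feed; the event fields and the `SUSPICIOUS_AMOUNT` threshold are hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical threshold; a real system would use a far richer rule or model.
SUSPICIOUS_AMOUNT = 1000.0


def event_stream() -> Iterator[dict]:
    """Stand-in for a live source such as a message queue or sensor feed."""
    yield {"id": 1, "amount": 25.0}
    yield {"id": 2, "amount": 4200.0}
    yield {"id": 3, "amount": 80.0}


def ingest(events: Iterable[dict]) -> Iterator[dict]:
    """Handle each event as soon as it arrives, without waiting for a batch."""
    for event in events:
        yield {**event, "flagged": event["amount"] > SUSPICIOUS_AMOUNT}


processed = list(ingest(event_stream()))
flagged_ids = [e["id"] for e in processed if e["flagged"]]
print(flagged_ids)
```

Because `ingest` is itself a generator, each event can be acted on before the next one has even been produced.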

3. Micro-batching

Micro-batching represents a balanced approach between batch and real-time data processing methods. It ingests data frequently in small batches, which enables near-real-time updates.

This approach is crucial for businesses seeking timely data integration without overburdening their resources with continuous real-time processing demands. Micro-batching finds its niche in scenarios where maintaining data freshness is vital, yet full-scale real-time processing would be impractical.
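
One simple way to picture micro-batching is to cut a continuous stream into small fixed-size groups. The sketch below does this by count; production systems often cut batches by time window instead, and the helper name `micro_batches` is purely illustrative.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Group a continuous stream into small fixed-size batches."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the stream is exhausted
        yield batch


# Seven events split into batches of three; the last batch is smaller.
batches = list(micro_batches(range(7), batch_size=3))
print(batches)
```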

The Data Ingestion Process

1. Data Discovery

Data discovery is the initial step in the data ingestion lifecycle, where organisations seek to explore, comprehend, and access data from diverse sources. It serves the crucial purpose of identifying available data, its origins, and its potential utility within the organisation.

This exploratory phase is pivotal for understanding the data landscape comprehensively, including its structure, quality, and usability. During data discovery, the primary goal is to uncover insights into the nature of the data and its relevance to organisational objectives.

This process involves scrutinising various sources such as databases, APIs, spreadsheets, and physical documents. By doing so, businesses can ascertain the data’s potential value and strategise its optimal utilisation. Organisations often rely on project management services to streamline and efficiently coordinate these data discovery efforts.

2. Data Acquisition

Data acquisition, also known as data ingestion, follows data discovery. It entails the act of gathering identified data from diverse sources and integrating it into the organisation’s systems. This phase is crucial for maintaining data integrity and harnessing its full potential.

Despite the challenges posed by different data formats, volumes, and quality issues, effective data acquisition ensures that the data is accessible and usable for analytics, decision-making, and operational efficiency. Automating data ingestion using specialised tools or services further streamlines this process, reducing manual effort and improving accuracy. Examples include ETL (Extract, Transform, Load) tools and cloud-based platforms for seamless data integration.

3. Data Validation

After acquisition, the next phase is data validation, a critical step in guaranteeing the reliability of acquired data. Here, the data undergoes rigorous checks for accuracy and consistency. Various validation techniques are applied, including data type validation, range checks, and ensuring data uniqueness. These measures ensure the data is clean and correct, preparing it for subsequent processing steps.
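
The three techniques named above (data type validation, range checks, and uniqueness) could look something like the following. The record layout, the `order_id` and `quantity` fields, and the 1 to 1000 range are assumptions made for the example.

```python
def validate(records):
    """Split records into (valid, rejected) using three simple checks."""
    valid, rejected, seen_ids = [], [], set()
    for record in records:
        order_id = record.get("order_id")
        quantity = record.get("quantity")
        if not isinstance(order_id, int) or not isinstance(quantity, int):
            rejected.append((record, "wrong type"))  # data type validation
        elif not 1 <= quantity <= 1000:
            rejected.append((record, "quantity out of range"))  # range check
        elif order_id in seen_ids:
            rejected.append((record, "duplicate order_id"))  # uniqueness
        else:
            seen_ids.add(order_id)
            valid.append(record)
    return valid, rejected


records = [
    {"order_id": 1, "quantity": 2},
    {"order_id": 2, "quantity": "3"},  # quantity is a string
    {"order_id": 3, "quantity": 0},    # below the allowed range
    {"order_id": 1, "quantity": 5},    # order_id already seen
]
valid, rejected = validate(records)
for record, reason in rejected:
    print(record, "->", reason)
```

Keeping the rejection reason alongside each record makes it easier to report on data quality rather than silently discarding rows.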

4. Data Transformation

Once validated, the data moves to the transformation phase. This step involves converting the data from its original format into a format conducive to analysis and interpretation. Techniques such as normalisation and aggregation are applied to refine the data, making it more understandable and meaningful for insights. Data transformation is crucial as it prepares the data to be utilised effectively for decision-making purposes.
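
Normalisation and aggregation can each be shown in a few lines. The sketch below uses min-max scaling as one common form of normalisation and a per-region sum as the aggregation; the `region` and `sales` fields are hypothetical.

```python
from collections import defaultdict


def min_max_normalise(values):
    """Rescale values to the 0..1 range; a constant list maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def total_by_key(rows, key, field):
    """Aggregate by summing `field` for each distinct value of `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[field]
    return dict(totals)


rows = [
    {"region": "north", "sales": 100.0},
    {"region": "south", "sales": 300.0},
    {"region": "north", "sales": 200.0},
]
normalised = min_max_normalise([r["sales"] for r in rows])
totals = total_by_key(rows, "region", "sales")
print(normalised)
print(totals)
```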

5. Data Loading

Following transformation, the processed data is loaded into a data warehouse or another designated destination for further utilisation. This final step in the process ensures that the data is readily available for analysis or reporting. Depending on requirements, data loading can occur in batches or in real-time, facilitating immediate access to updated information for decision support.
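
To make the loading step concrete, the sketch below writes a small batch of transformed rows into an in-memory SQLite database using Python's standard `sqlite3` module. SQLite stands in for a real data warehouse here, and the `regional_sales` table and its columns are invented for the example.

```python
import sqlite3

# Hypothetical output of the transformation step: (region, total) rows.
rows = [("north", 300.0), ("south", 300.0)]

# An in-memory database stands in for the real destination.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE regional_sales (region TEXT PRIMARY KEY, total REAL)")

# Using the connection as a context manager commits the batch on success
# and rolls it back if any insert fails.
with conn:
    conn.executemany(
        "INSERT INTO regional_sales (region, total) VALUES (?, ?)", rows
    )

loaded = conn.execute(
    "SELECT region, total FROM regional_sales ORDER BY region"
).fetchall()
conn.close()
print(loaded)
```

Loading the batch inside a single transaction means the destination never sees a half-written set of rows.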

Conclusion

In conclusion, data ingestion is a cornerstone of modern data-driven enterprises, enabling the smooth flow of information from diverse sources to centralised repositories. This pivotal process ensures that businesses can harness the full potential of their data for strategic decision-making, operational efficiency, and actionable insights.

By adopting efficient data ingestion techniques, whether real-time, batch processing, or micro-batching, organisations can maintain agility in adapting to market demands and technological developments.

Automated tools further enhance this capability by streamlining collection, validation, transformation, and loading, thereby minimising manual effort and improving accuracy.

Moreover, data ingestion enhances quality through validation and transformation and facilitates timely access to critical information for analytics and reporting.